Unity: AR Foundation Remote 2.0

A downloadable tool

In simple words: AR Foundation Remote 2.0 = Unity Remote + AR Foundation + Input System (New) + So Much More.

💡 Current workflow with AR Foundation 💡

1. Make a change to your AR project.

2. Build project to a real AR device.

3. Wait for the build to complete.

4. Wait a little bit more.

5. Test your app on a real device using only Debug.Log().

🔥 Improved workflow with AR Foundation Remote 2.0 🔥

1. Set up the AR Companion app once. Setup takes only a few minutes.

2. Just press play! Run and debug your AR app with full access to scene hierarchy and all object properties right in the Editor!

⚡ Features ⚡

• Precisely replicates the behavior of a real AR device in the Editor.

• Extensively tested with ARKit and ARCore.

• Plug-and-play: no additional scene setup is needed. Just run your AR scene in the Editor while the AR Companion app is running. Extensively tested with scenes from the AR Foundation Samples repository.

• Streams video from the Editor to a real AR device so you can see how your app looks on it without making a build (see Limitations).

• Multi-touch input remoting: stream multi-touch input from an AR device or simulate touch using a mouse in the Editor (see Limitations).

• Location Services remoting: test GPS right in the Editor.

• Written in pure C# with no third-party libraries or native code. Adds no performance overhead in production. Full source code is available.

• Connect any AR device to a Windows PC or Mac via Wi-Fi: iOS + Windows PC, Android + macOS... any combination you can imagine!

• Compatible with Wikitude SDK Expert Edition.

🎥 Session Recording and Playback 🎥

The Session Recording and Playback feature allows you to record AR sessions to a file and play them back in a reproducible environment (see Limitations).

• Record and playback all supported features: face tracking, image tracking, plane tracking, touch input, you name it!

• Fix bugs that occur only under specific conditions. Playing back a previously recorded AR session in a reproducible environment will help you track down and fix bugs even faster!

• Record testing scenarios for your AR app. Your testers don't have to fight over testing devices ever again: record a testing scenario once, then play it back as many times as you want without an AR device.

⚓️ ARCore Cloud Anchors ⚓️

Testing ARCore Cloud Anchors doesn't have to be that hard. With a custom fork adapted to work with AR Foundation Remote 2.0, you can run AR projects with ARCore Cloud Anchors right in the Unity Editor (see Limitations).

• Host Cloud Anchors.

• Resolve Cloud Anchors.

• Record an AR session with ARCore Cloud Anchors and play it back in a reproducible environment.

🕹 Input System (New) support 🕹

Version 2.0 brings Input System (New) support with all the benefits of input events and enhanced touch functionality.

• Input Remoting allows you to transmit all input events from your AR device back to the Editor. Test Input System multi-touch input right in the Editor!

• Test Input Actions right in the Editor without making builds.

• Record all Input System events to a file and play them back in a reproducible environment. Again, all supported features can be recorded and played back!

⚡ Supported AR subsystems ⚡

• ARCore Cloud Anchors: host and resolve ARCore Cloud Anchors.

• Meshing (ARMeshManager): physical environment mesh generation, ARKit mesh classification support.

• Occlusion (AROcclusionManager): ARKit depth/stencil human segmentation, ARKit/ARCore environment occlusion (see Limitations).

• Face Tracking: face mesh, face pose, eye tracking, ARKit Blendshapes.

• Body Tracking: ARKit 2D/3D body tracking, scale estimation.

• Plane Tracking: horizontal and vertical plane detection, boundary vertices, raycast support.

• Image Tracking: supports mutable image library and replacement of image library at runtime.

• Depth Tracking (ARPointCloudManager): feature points, raycast support.

• Camera: camera background video (see Limitations), camera position and rotation, facing direction, camera configurations.

• Camera CPU images: ARCameraManager.TryAcquireLatestCpuImage(), XRCpuImage.Convert(), XRCpuImage.ConvertAsync() (see Limitations).

• Anchors (ARAnchorManager): add/remove anchors, attach anchors to detected planes.

• Session subsystem: Pause/Resume, receive Tracking State, set Tracking Mode.

• Light Estimation: Average Light Intensity, Brightness, and Color Temperature; Main Light Direction, Color, and Intensity; Exposure Duration and Offset; Ambient Spherical Harmonics.

• Raycast subsystem: raycast against detected planes and point clouds (see Limitations).

• Object Tracking: ARKit object detection after scanning with a scanning app (see Limitations).

• ARKit World Map: full support of ARWorldMap. Serialize the current world map, deserialize the saved world map and apply it to the current session.

| Field | Value |
| --- | --- |
| Status | Released |
| Category | Tool |
| Rating | Rated 5.0 out of 5 stars (1 total rating) |
| Author | Kyrylo Kuzyk |

Purchase

To download this tool, you must purchase it at or above the minimum price of $150 USD.

Comments


Hello esteemed creator. I wanted to ask you a question because I don't see anything on the asset page about licensing. Regarding the unitypackage file that comes with the AR Foundation Remote download, would it be possible to share it with my students who are working on the AR project? I'm afraid this might be a rude question.

Please feel free to respond at your convenience, Thank you!

Hi,

The licensing for students is managed on a case-by-case basis. Please get in touch with me via email, and I'll provide more info: kuzykkirill@gmail.com

thanks for your positive reply

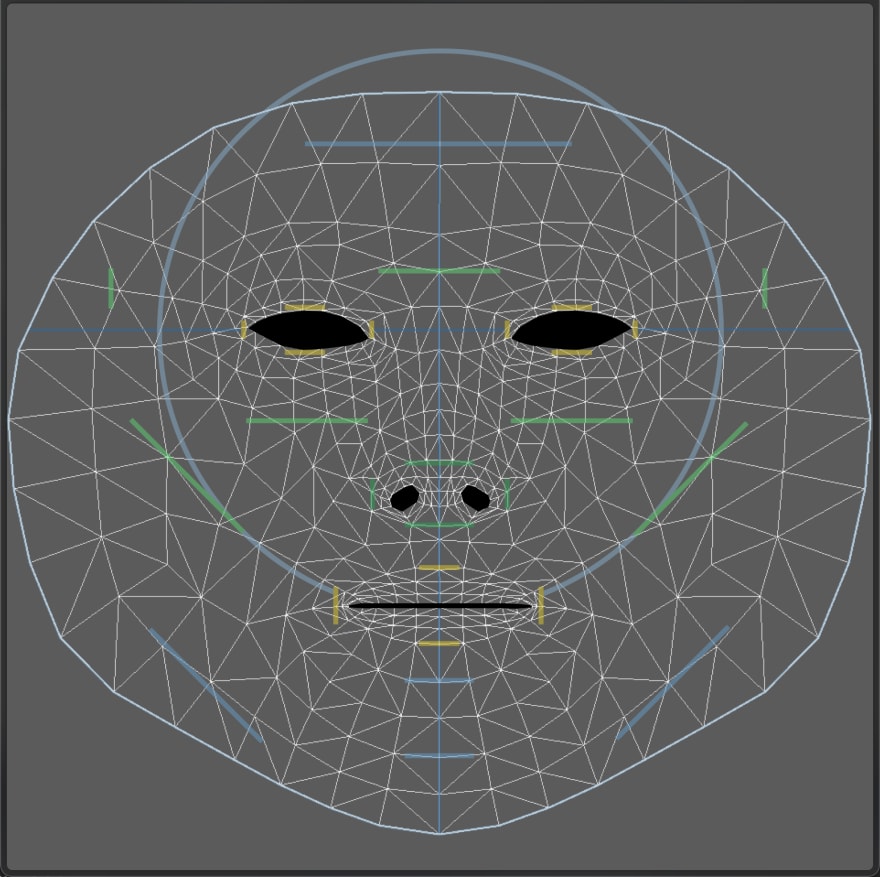

Sir, tell me one more thing: ARCore provides this mesh for creating your own texture on the user's face. I have attached an image.

So, sir, does ARKit provide a texture like this? If yes, please share it.

Unfortunately, I'm not aware of a similar texture for ARKit.

The Face Mesh example does not work properly: the face mesh doesn't show holes for the user's eyes, mouth, and nose. Please fix this example; I need it.

thanks

This is expected behavior because this is not supported by ARCore. Only ARKit supports holes in the mesh for the eyes and mouth.

Please fix the ambiguous reference between the JetBrains NotNull and the System NotNull. I can't even install it.

The beta version of Unity 2021.2 is not currently supported; please install a stable version of Unity 2019.2 or newer.

I fixed the NotNull attribute error internally, but, unfortunately, this is not enough to support Unity 2021.2: data serialization/deserialization is not working properly in this version.